I’m Neale Ratzlaff, 🌲 🌲 a deep learning and computer vision researcher working on Bayesian deep learning, with applications to computer vision and deep reinforcement learning.

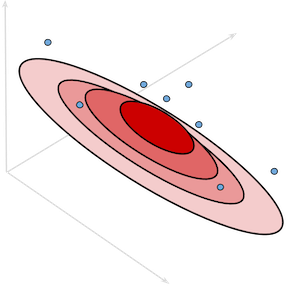

I did my PhD under Dr. Fuxin Li at Oregon State University. I’m broadly interested in variational inference with implicit distributions, generative models, uncertainty quantification, and information geometry. It’s important to create AI agents which we can trust, meaning I spend a lot of time thinking about alignment and paperclips.

Currently I’m interested in leveraging particle-based variational inference to create better Bayesian neural networks. These methods let us learn the intractable posterior distributions over network parameters, even for complex neural networks. The resulting distributions have numerous benefits over single models, and even over bootstrapped ensembles.

I’ve worked at Horizon Robotics, Intel, and Tektronix.

News

- I successfully defended my dissertation on uncertainty quantification in deep learning with implicit distributions over neural networks.

- I accepted a research scientist position at HRL Laboratories in Malibu, CA.

Papers

Contrastive Identification of Covariate Shift in Image Data

Matthew L. Olson, Thuy-Vy Nguyen, Gaurav Dixit, Neale Ratzlaff, Weng-Keen Wong, Minsuk Kahng. (IEEE VIS) 2021

We design and evaluate a new visual interface that facilitates the comparison of the local distributions of training and test data. We conduct a quantitative user study on multi-attribute facial data to compare the learned latent representations of ImageNet CNNs vs. density ratio models, with two user analytic workflows.

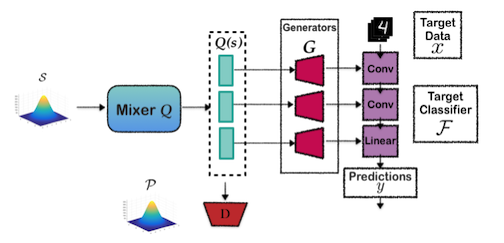

Generative Particle Variational Inference via Estimation of Functional Gradients

Ratzlaff☨, Bai☨, Fuxin, Xu. (ICML) 2021

(☨): Equal Contribution

Code: coming very soon

Slides and Talk: ICML landing page

We introduce GPVI, a new method for Bayesian deep learning that fuses the flexibility of particle-based variational methods with the efficiency of generative samplers. We construct a neural sampler that is trained with the functional gradient of the KL-divergence between the empirical sampling distribution and the target distribution. We show that GPVI accurately models the posterior distribution when applied to density estimation and Bayesian neural networks. This work also features a new method for estimating the inverse of the input-output Jacobian without invertibility restrictions.

Avoiding Side-Effects in Complex Environments.

Turner☨, Ratzlaff☨, Tadepalli. (NeurIPS) 2020, Spotlight talk

(☨): Equal Contribution

Code: Github Repo

We introduce reinforcement learning agents that can accomplish goals without incurring side effects. Standard RL agents collect reward at any cost, often unsustainably ruining the environment around them. The attainable utility penalty (AUP) penalizes agents for acting in a way that decreases their ability to achieve unknown future goals. We extend AUP to the deep RL case, and show that our AUP agents can act in difficult environments with stochastic dynamics, without incurring side effects.
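As a rough illustration of the penalty idea described above, here is a minimal sketch and not the paper's implementation. It assumes the agent has value estimates for a set of auxiliary goals, and that the penalty compares taking an action against a no-op baseline. The names `aup_penalty`, `shaped_reward`, and the weight `lam` are hypothetical.

```python
from typing import Sequence


def aup_penalty(q_action: Sequence[float], q_noop: Sequence[float]) -> float:
    """Mean absolute change in auxiliary-goal value versus doing nothing.

    q_action[i] / q_noop[i]: assumed value estimates for auxiliary goal i
    after taking the candidate action / the no-op action.
    """
    if not q_action or len(q_action) != len(q_noop):
        raise ValueError("need one value per auxiliary goal for both actions")
    return sum(abs(a - n) for a, n in zip(q_action, q_noop)) / len(q_action)


def shaped_reward(task_reward: float,
                  q_action: Sequence[float],
                  q_noop: Sequence[float],
                  lam: float = 0.1) -> float:
    """Task reward minus a weighted penalty (lam is a hypothetical trade-off)."""
    return task_reward - lam * aup_penalty(q_action, q_noop)
```

An action that leaves every auxiliary value unchanged incurs no penalty, so under this sketch the agent is only discouraged from actions that shift what it could achieve later.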

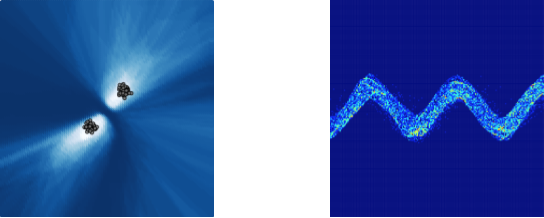

Implicit Generative Modeling for Efficient Exploration.

Ratzlaff, Bai, Fuxin, Xu. (ICML) 2020

We model uncertainty estimation as an intrinsic reward for efficient exploration. We introduce an implicit generative modeling approach to estimate a Bayesian uncertainty of the agent’s belief about the environment dynamics. We approximate the posterior through multiple draws from our generative model. The variance of the dynamic models’ output is used as an intrinsic reward for exploration. We design a training algorithm for our generative model based on amortized Stein Variational Gradient Descent, to ensure the parameter distribution is a nontrivial approximation to the true posterior.
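The variance-as-reward idea above can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the paper's code: each sampled dynamics model is assumed to have already produced a next-state prediction, and the per-dimension variances are averaged into one scalar. The function name `intrinsic_reward` and the averaging choice are hypothetical.

```python
from statistics import pvariance
from typing import Sequence


def intrinsic_reward(predictions: Sequence[Sequence[float]]) -> float:
    """Disagreement among sampled dynamics models for one (state, action).

    predictions[k][d] is the d-th next-state dimension predicted by the
    k-th dynamics model drawn from the generator (assumed precomputed).
    """
    if not predictions or not predictions[0]:
        raise ValueError("need at least one non-empty prediction")
    per_dim = list(zip(*predictions))  # group predictions by state dimension
    # Population variance across models, averaged over state dimensions.
    return sum(pvariance(dim) for dim in per_dim) / len(per_dim)
```

When all sampled models agree the reward is zero, so under this sketch the agent is drawn toward transitions where the models still disagree.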

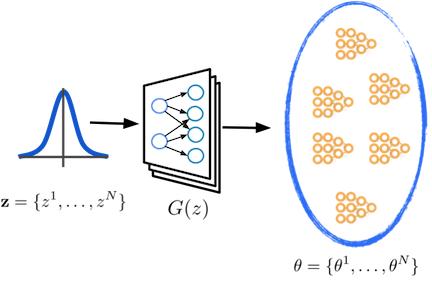

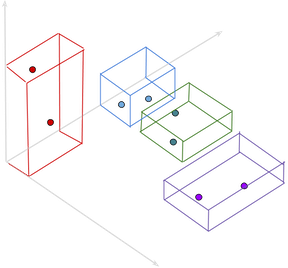

HyperGAN: A Generative Model for Diverse Performant Neural Networks.

Ratzlaff, Fuxin. (ICML) 2019

Code: HyperGAN Github repo

Talk: ICML Oral Slideshare

We learn an implicit ensemble using a neural generating network. Trained by maximum likelihood, the generator learns to sample from the posterior of model parameters which fit the data. The generated model parameters achieve high accuracy, yet are distinct with different predictive distributions. We enforce diversity by regularizing the intermediate representations to be well-distributed, while not harming the flexibility of the output distribution.

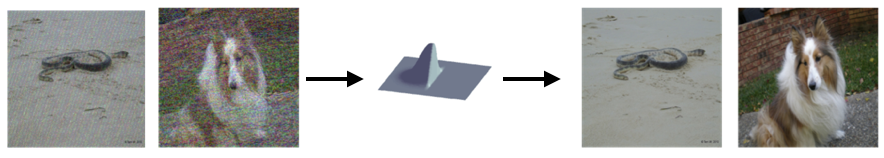

Unifying Bilateral Filtering and Adversarial Training for Robust Neural Networks.

Ratzlaff, Fuxin. (arxiv preprint) 2018

Code: Github repo

We use an adaptive bilateral filter to smooth the perturbations left by adversarial attacks. We view our method as a piecewise projection of the high-frequency perturbations back to the natural image manifold. Our method is simple, effective, and practical, unlike many other projection defenses.

Theses

Methods for Detection and Recovery of Out-of-Distribution Examples. M.S. Degree Computer Science. Oregon State University (2018)

Code

I maintain and work on various other projects, all deep learning related. Pretty much all of them are in PyTorch.

- Adversarial Autoencoders

- Improved Wasserstein GAN

- Compositional Pattern Producing Networks

- Satisfiable Neural Networks (PySMT-DNN)

Generative Art

Inspired by Hardmaru, I build evolutionary creative machines. There is a gallery of examples here.